Deep Learning-Based Food Image Classification Using Enhanced CNN Architecture and Data Augmentation Techniques

Abstract

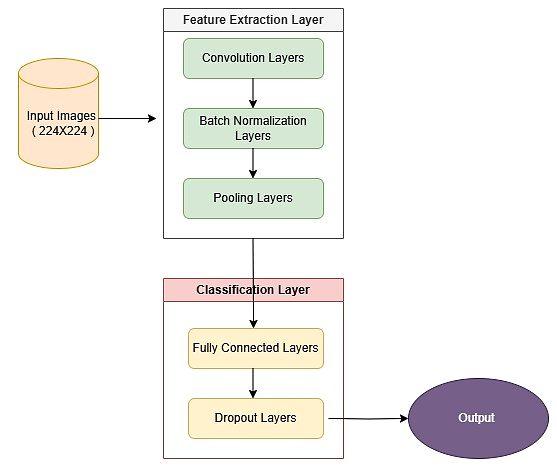

Automated food image classification has emerged as a critical research area because of its applications in dietary monitoring, calorie estimation, and personalized nutrition. However, food images exhibit high intra-class variability and high inter-class similarity, which makes the task difficult. This study proposes an optimized deep learning solution built on a custom convolutional neural network (CNN) architecture to address these challenges. The model uses batch normalization, dropout, and data augmentation to improve generalization and mitigate overfitting. It was evaluated on the widely used Food-101 dataset, which contains over 100,000 images across 101 food categories. The images were preprocessed by resizing and normalization, and augmented to increase diversity and robustness. The model was trained with the Adam optimizer at a learning rate of 0.001, and its performance was assessed using accuracy, precision, recall, and F1-score. The proposed model achieved an accuracy of 93.2%, outperforming the benchmark architectures VGG-16 (87.6%) and ResNet-50 (91.0%). Its performance was also balanced, with a precision of 92.4%, a recall of 93.1%, and an F1-score of 92.7%. These results indicate that the proposed architecture captures the complex visual features of food images effectively. Although the model performs well overall, visually similar food categories remain difficult to classify. Future work aims to address this limitation by integrating attention mechanisms and multi-modal data to improve classification accuracy and real-world applicability. This research contributes robust and scalable solutions to the field of food image classification.
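
The four evaluation metrics named in the abstract can be illustrated with a minimal Python sketch. The abstract does not state how per-class scores were averaged over the 101 categories, so macro averaging is an assumption here, and the function names `macro_metrics` and `harmonic_f1` are hypothetical helpers, not part of the authors' code.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall, macro F1).

    Assumption: macro averaging (unweighted mean over classes); the
    abstract does not say which averaging scheme was used.
    """
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1   # correct prediction for class t
        else:
            fp[p] += 1   # p was predicted but was wrong
            fn[t] += 1   # t was the true class but was missed

    precisions, recalls, f1s = [], [], []
    for c in labels:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    n = len(labels)
    accuracy = sum(tp.values()) / len(y_true)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


def harmonic_f1(precision, recall):
    """F1 as the harmonic mean of one precision/recall pair."""
    return 2 * precision * recall / (precision + recall)


# Toy labels for illustration only; these are not Food-101 results.
y_true = ["pizza", "pizza", "sushi", "sushi"]
y_pred = ["pizza", "sushi", "sushi", "sushi"]
acc, prec, rec, f1 = macro_metrics(y_true, y_pred)

# Consistency check on the figures reported in the abstract.
reported_f1 = harmonic_f1(92.4, 93.1)
```

As a sanity check, the harmonic mean of the reported precision (92.4%) and recall (93.1%) is about 92.75%, which agrees with the reported F1-score of 92.7% to one decimal place.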

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

IJCERT Policy:

The published work presented in this paper is licensed under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. This means that the content of this paper can be shared, copied, and redistributed in any medium or format, as long as the original author is properly attributed. Additionally, any derivative works based on this paper must also be licensed under the same terms. This licensing agreement allows for broad dissemination and use of the work while maintaining the author's rights and recognition.

By submitting this paper to IJCERT, the author(s) agree to these licensing terms and confirm that the work is original and does not infringe on any third-party copyright or intellectual property rights.
